A brief overview of the latest in EU AI regulations, and what it means for professionals

Artificial intelligence (AI) is transforming the way we work, communicate, and interact with each other. It can also pose significant challenges and risks to our safety, privacy, and fundamental rights.

In April 2021, the European Commission proposed the first EU regulatory framework for AI. Under the proposal, AI systems that can be used in different applications are analysed and classified according to the risk they pose to users, and different risk levels mean more or less regulation. As part of its digital strategy, the EU wants to regulate AI to ensure better conditions for the development and use of this innovative technology, which can bring many benefits.

In December 2023, the European Parliament and the Council of the EU reached a political agreement on this landmark legislation, known as the Artificial Intelligence Act, or EU AI Act. The Act aims to foster the development and adoption of trustworthy and human-centric AI across the EU, while ensuring that high-risk AI systems are subject to strict rules and oversight.

On 1 August 2024, the Act entered into force, becoming the world’s first comprehensive regulation of AI.


What is the Artificial Intelligence Act?

The AI Act is a comprehensive legal framework that covers the entire lifecycle of AI systems, from their design and development to their deployment and use. The Commission’s 2021 proposal defined AI as “software that is developed with one or more of the techniques and approaches listed in Annex I and can, for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations, or decisions influencing the environments they interact with”. The techniques and approaches listed in that annex included machine learning, logic and knowledge-based approaches, statistical approaches, and combinations thereof. The adopted text replaced this list with a broader, technology-neutral definition of an “AI system” as a machine-based system that infers from the input it receives how to generate such outputs.

The goal of the Act is to regulate AI according to its potential to cause harm to society, using a ‘risk-based’ approach: the higher the risk, the stricter the rules. As the world’s first law of its kind, it could set a global standard for AI regulation in other jurisdictions and promote the European approach to technology regulation around the world.

EU Commissioner Thierry Breton described the plans as “historic”, saying they set “clear rules for the use of AI”. He added that it is “much more than a rulebook – it’s a launch pad for EU start-ups and researchers to lead the global AI race”.

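With the AI Act now in force, any company that places AI systems on the EU market or uses them in the EU falls within the scope of the law, including providers based outside the EU whose systems’ output is used in the EU. Most obligations apply in stages through 2026 and 2027. For the most serious violations, non-compliance can lead to fines of up to €35 million or 7% of total worldwide annual turnover, whichever is higher.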

The AI Act’s risk-based approach

The AI Act classifies AI systems into categories based on the level of risk they pose:

Unacceptable risk

AI systems that are considered to be contrary to the EU’s values and principles, such as those that manipulate human behaviour or exploit vulnerabilities. These AI systems are banned in the EU. Examples include social scoring and voice-activated children’s toys that encourage dangerous behaviour.

High risk

AI systems that are used in critical sectors or contexts, such as health care, education, law enforcement, justice, or public administration. These AI systems are subject to strict obligations, such as ensuring data quality, transparency, human oversight, accuracy, robustness, and security. They also need to undergo a conformity assessment before being placed on the market or put into service.

Limited risk

AI systems whose risks stem mainly from a lack of transparency, such as chatbots. These AI systems are subject to specific transparency requirements, such as informing users that they are interacting with an AI system. Systems that generate or manipulate images, audio, or video, such as deepfakes, must also disclose that the content is artificially generated.

Minimal risk

Minimal risk refers to AI applications that are considered to pose little to no threat to citizens’ rights or safety. These applications, such as spam filters, can be deployed without strict regulatory requirements, allowing for easier integration into the market.

Generative AI

Generative AI tools such as ChatGPT, which create text, images, or videos, will need to meet transparency requirements, including:

- Disclosing that the content is AI-generated rather than human-made.

- Being designed in a way that prevents them from producing content that violates the law, such as hate speech, defamation, or incitement to violence.

- Respecting the intellectual property rights of the data sources used to train the model, and publishing summaries of the copyrighted data used for training.

What does the AI Act mean for professionals?

The AI Act will have significant implications for professionals who use AI in their work, especially those involved in developing or deploying high-risk AI systems. Depending on their role, professionals will need to comply with the obligations set out in the Act, such as ensuring the data quality, transparency, human oversight, accuracy, robustness, and security of their AI systems.

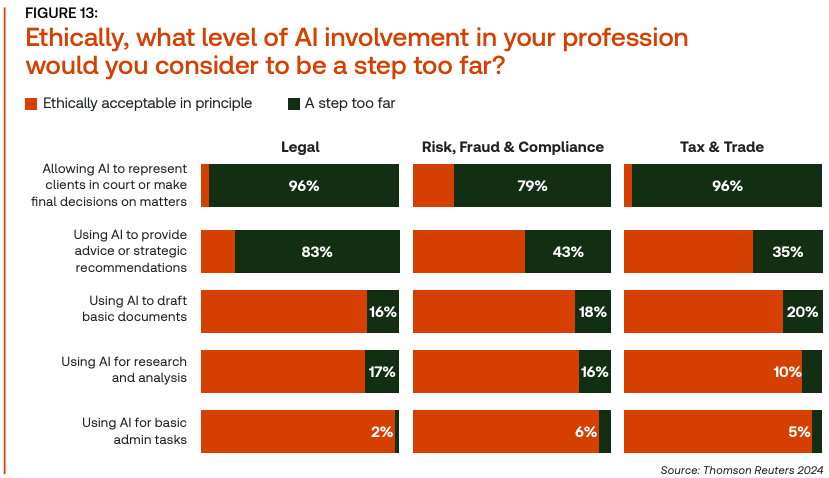

In the second annual Future of Professionals report from the Thomson Reuters Institute, professionals appear divided on what constitutes “a step too far” for AI use, ethically speaking. Among the biggest questions surrounding the use of AI-powered technology in professional settings is: “What does AI regulation & responsible use look like?”

The AI Act will also create new opportunities and benefits for professionals who use AI in their work. By setting clear and harmonised rules for the use of AI in the EU, it will establish a level playing field and a single market for AI, facilitating cross-border trade and innovation. It will also enhance trust and confidence in AI, among users and consumers as well as among public authorities and regulators.

“The future of AI is not predestined – it’s ours to shape,” Steve Hasker, president and CEO of Thomson Reuters, recently told ComputerWeekly.com.

“It is our duty to build our AI applications responsibly and ethically. We must insist that the same ethics that have long governed legal, tax and accounting, and other knowledge-industry professions also must inform and inspire the professional use of AI.”

At Thomson Reuters, we have a long history of innovation, starting in the 1800s, when we used technology to collect and organise information for our customers. Over time, we have incorporated AI to help customers find the information they need with speed and confidence.
